by Rachel Simmons and Bruce Wang

Last month, registration opened for re:Invent, the Amazon Web Services (AWS) conference billed as the largest gathering of the “global cloud computing community.”

On the splashy conference website, video imagery zooms energetically through the Vegas skyline, past spinning DJs, confetti, and hotel ballrooms packed with people. It promises technical deep dives on topics like the Internet of Things, alongside “quirky after hours experiences” like a wing-eating contest and a 5K. Passes for the week cost $1,600.

With our daily lives consumed by Netflix, Instagram, and Google Docs, you’d be excused for forgetting that merely a decade ago, there was no “global cloud computing community.” By 2006 we had come a long way from the days when computers themselves filled entire rooms, but our mounting mass of digital data and files still had to be stored on expensive, physical servers. The more files and storage needs you had, the more servers you needed, housed in warehouse-sized data centers built solely to keep and maintain them.

Moreover, it was tricky to plan for how much storage you would need down the road, so companies inevitably paid in advance for storage that would be used far later, or sometimes never at all: a cash-flow complication that troubled everyone from small startups to large corporations.

Even Amazon, the world’s largest online retailer, was struggling to scale alongside hardware costs as it continued to build its business. When it created a technology internally that enabled it to store vast amounts of computer data without setting up hardware, it also recognized that if Amazon needed that technology, so too might the rest of the world.

“Whether you are running applications that share photos to millions of mobile users or you’re supporting the critical operations of your business, the ‘cloud’ provides rapid access to flexible and low cost IT resources. With cloud computing, you don’t need to make large upfront investments in hardware and spend a lot of time on the heavy lifting of managing that hardware. Instead, you can provision exactly the right type and size of computing resources you need…You can access as many resources as you need, almost instantly, and only pay for what you use.”

–Amazon Web Services, What is Cloud Computing

The tech giants of the world were slow to adapt to the ethereally-named “cloud,” as giants often are. They called the new service “crappy” and stuck to their known solution: expensive but familiar data centers. Instead, the early market for the cloud was startups. They traded reliability and what was perceived as weaker security for low costs that enabled them to build and scale their businesses on bootstraps, acquiring data storage space as they needed it rather than paying upfront for a large amount of storage they might never use.

The Cloud Revolution

As the burly Erlich Bachman (HBO’s Silicon Valley) put it succinctly, the cloud [was] “…this crummy service, that’s becoming more important, that’s probably the future of computing.”

You see, the tenet of a healthy ecosystem is that members of the system have equally important roles to play in their symbiotic relationship. So what happened this past decade was a revolution that fed on itself: AWS supplied a super-low-cost infrastructure, then continued to improve it, delivering value at a breakneck pace. AWS partners, both companies and individual developers, built services on top of the cloud, developing new business models in the Platform-as-a-Service and Software-as-a-Service markets.

Their solutions made it even faster to build new services, so the stacking and layering of solutions accelerated the entire revolution.

And it fed a “decade of lean, capital-efficient startups,” says Sam Lessin for The Information. “The intersection of good open-source software, infrastructure as a service [i.e. AWS], inexpensive distribution, and some plug-and-play monetization like Google’s AdWords or an app store put all the power in the hands of small technical teams.” AWS had created an ecosystem that allowed (no, spurred) innovation to thrive.

AWS’s own growth looked something like this: in 2010, Netflix made the controversial move to build on top of AWS; in 2012, startup DeepField Networks analyzed anonymous network traffic in North America and found that one-third of the millions of users it observed visited a website built on Amazon’s cloud infrastructure each day; in 2014, the CIA moved a large part of its computing demand to AWS, beating out IBM, whose wide use within the U.S. government didn’t make up for a less impressive cloud offering at the time. By 2016, AWS’s customers had expanded to include Intuit, Time Inc., Juniper, the Guardian, and Hertz. Last year, AWS was estimated to have a public cloud ten times larger than those of its next 14 competitors (including IBM, Google, and Microsoft) combined. Continued partner growth propelled AWS to $2.57 billion in revenue in Q1 2016 and made it the biggest source of Amazon’s overall profits.

But now, in 2016, with cloud infrastructure folded into the fabric of the internet, business, government, and our lives, what’s next?

Says Lessin, “Open-source software and infrastructure as a service will remain cheap forever. But the low-hanging fruit of highly scalable software startups has mostly been eaten.

“The next big opportunities seem to be shaping up around things like self-driving cars, on-demand services, VR, bots, bio, drones. But such opportunities lack turnkey generic infrastructure which enables development costs to drop close to zero. They are all expensive games in which to participate.”

Why are we talking about this?

Because Pokemon Go.

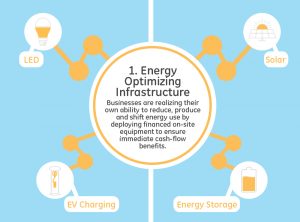

We kid. No, it’s because this essential idea, that infrastructure can support an ecosystem of low costs and innovation, is no less true in energy right now. Brightergy has been in the solar generation game since 2010, and we’ve watched our clients, both for-profit and nonprofit, seize growing choice and control over their own energy use by generating some or all of their needs on-site, usually using financing either to remove upfront costs or to greatly shorten the years to payback.

Brightergy team members installing a solar array on top of our headquarters in downtown Kansas City

Next, LED lights and other efficiency technologies became affordable. Paired with sensors, they allow energy consumption from lighting (the largest source of electricity consumption in the U.S. commercial market, according to the U.S. Department of Energy) to be tracked, measured, and controlled alongside other business metrics.

We’re now at a point where it’s possible to start building an energy strategy by tracking energy data in real time with energy management software, using the insights gained from those analytics to invest in projects and services that reduce energy consumption, and then adding on-site generation.

This is a shift in the role of energy from a cost (a bill that’s paid at the end of the month, with no control over the amount) to an asset.

It’s also a shift with an intersection of its own. On one side is the growth of the cloud and, subsequently, the Internet of Things: devices that can monitor energy consumption on breaker boxes, lights, plugs, and more, and then report that data over the Internet. On the other side are rising energy consumption and costs.

Traditionally, energy companies have been large and slow-moving. Their infrastructure (power plants, substations, “poles and wires,” and so on) is now vast and aging, requiring more and more capital to maintain and replace, which in turn contributes to rate increases. Many utilities are heavily regulated and are also compensated for the amount of infrastructure they build, a holdover from when we were first accessing electricity in our homes and businesses and the industry was building its own economies of scale. And then there are the challenges of adapting to new and emerging technologies and their collective influence on the grid.

As the utility industry works out what its next business model will be and how it will get there, a window has opened for companies, new and old, that help other businesses reimagine energy as something to be managed strategically. As with the cloud revolution spurred by Amazon and AWS, moving beyond the electricity-as-simply-a-bill-you-pay mentality will require the energy industry to grow symbiotic relationships in which larger companies provide accessible infrastructure that, in turn, encourages smaller, nimbler companies to innovate and build on top of it.

One of the keys to AWS’s success, after all, was the self-feeding system it created, and the rapid execution required to keep up with it: what Amazon calls the “virtuous cycle.”

“The basic game plan for all cloud-computing vendors is to make these clouds [Infrastructure-as-a-Service and Platform-as-a-Service] to both independent software developers and big companies. Developers might dip their toe in the water with a single app, but as their business grows, so will their usage of the cloud. The more customers a cloud platform gets, the more servers it can afford to add. The more servers they have, the better they can take advantage of economies of scale, and offer customers lower prices for more robust features with more enterprise appeal. The lower their prices and the better their products, the more customers they get, and the more new customers switch over to the cloud.”

–Business Insider, Why Amazon is So Hard to Topple in the Cloud

Energy-as-a-Service

Image via GE Current

What the energy industry needs, more specifically, is an infrastructure that centralizes and manages all of these different technologies, both hardware and software. Says Maryrose Sylvester, CEO & President of Current, a startup within GE, “Companies need to manage all this complexity with scalable, holistic solutions that can keep pace with change while making them greener, more efficient, and more nimble. And they need to do it without breaking the bank. Only one way offers these benefits: energy-as-a-service.”

The idea builds on the as-a-service model brought forth by AWS and the cloud: simple, scalable, easily updatable solutions that lower costs for partners and shift the maintenance burden to the provider. “Supplemented by the Industrial Internet [Internet of Things], energy-as-a-service helps the enterprise continuously improve energy management over time with new sensors and software to meet changing needs, and companies can pay for that service on an ongoing basis based on use, instead of committing to an array of technology capabilities that might not meet long-term needs,” says Sylvester.

“This model,” she continues, “is also ripe for partnership, and where there is partnership, there is money, competition, and opportunity: a thriving ecosystem, all driving innovation.”

In another ten years, will we be hopping in our self-driving cars on our way to the largest gathering of the “energy cloud community,” where we’ll no doubt participate in “quirky after hours experiences” of our own (karaoke ping pong?) and attend breakouts on new solar applications and infrastructure security? For her part, Sylvester imagines, among many potential applications, “a hospital, where sensors could track medical equipment, sanitation practices by medical personnel, and send personalized health- or prescription-related information to a patient.”

With the right mix of partners, large and small, and the right infrastructure, the possibilities are limited only by imagination.